Canary Deployments, A/B Testing, and Microservices Using Edge Stack

Canary deployments are a popular technique for incrementally testing changes on real-world traffic. In a traditional application, canary deployments occur at the granularity of the entire application. This limits their utility, because a single feature cannot be tested against real-world traffic in isolation.

With a microservices architecture, this is no longer the case: a single service team can test its updates with real-world users.

Unlike a team working on a monolith, a microservices team can:

- test multiple versions of their service simultaneously

- control which versions of their service are being tested and when

With these capabilities, a service team can not only conduct canary deployments, but also run multiple versions of its service for A/B testing, validating specific fixes, and so forth. The key to enabling these use cases is a Layer 7 reverse proxy.

Reverse proxies and Layer 7

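A reverse proxy capable of managing Layer 7 (application-layer, for example HTTP) traffic is necessary to support these capabilities. All incoming traffic passes through the reverse proxy, which can inspect request attributes such as the path, headers, or cookies and use them to route each request to a particular version of a service.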

In this example, we'll use the Envoy Proxy. Envoy is a lightweight, high-performance proxy designed for modern microservices architectures. The breadth of its features, however, makes configuring Envoy directly a complicated exercise. Thus we'll use Ambassador Edge Stack (Ambassador for short), a Kubernetes-native open source API gateway built on Envoy that provides a sophisticated solution for Kubernetes ingress.

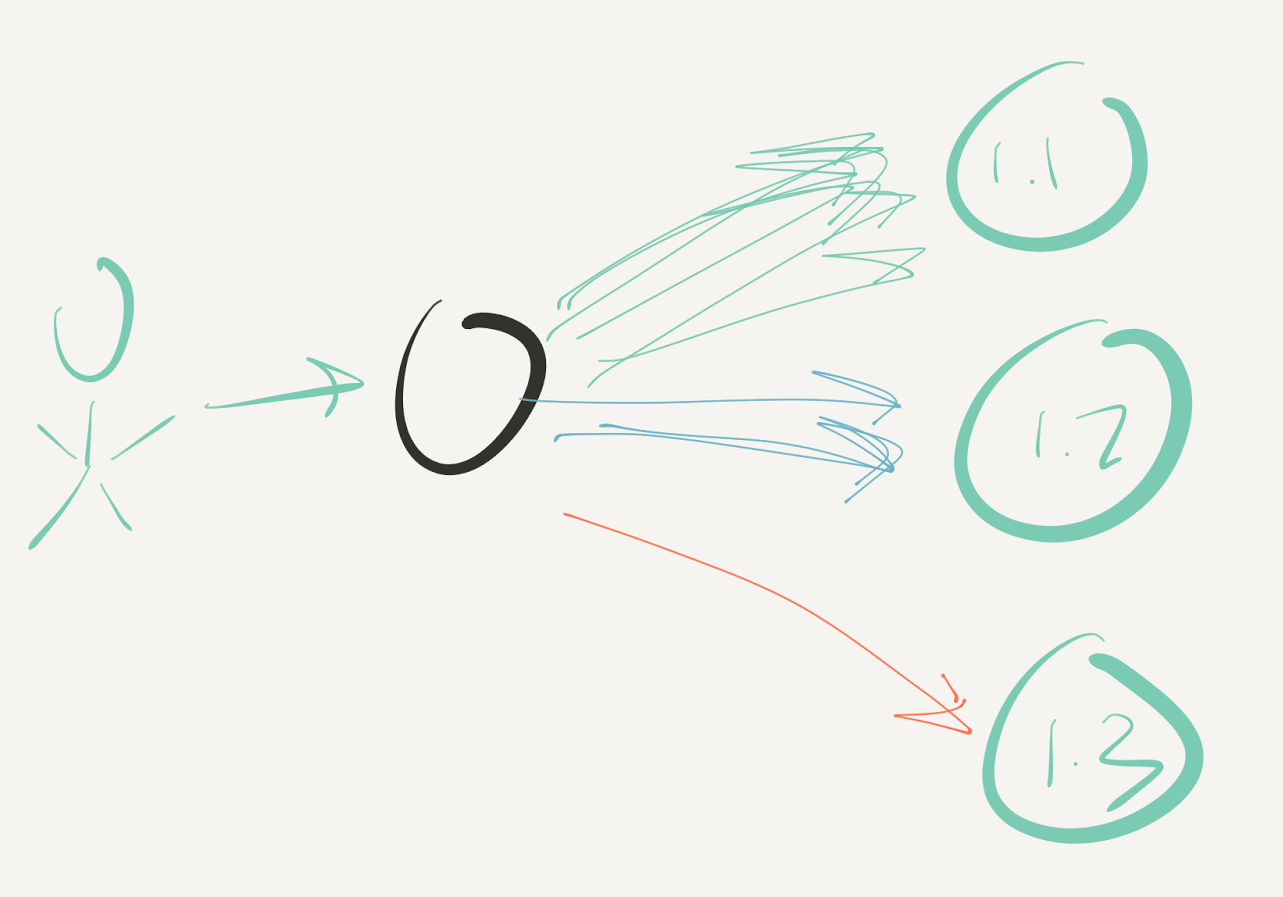

The picture above illustrates how an end user sends traffic to an L7 reverse proxy, which can then route that traffic to different versions of the service based on request criteria. For example, the proxy could split traffic between a stable 1.1 version and a canary 1.2 version using a weighted round robin policy, while sending only authenticated developer requests to the development 1.3 version.
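To make that policy concrete, here is a minimal Python sketch of the per-request decision such a proxy makes. It is not Envoy's or Ambassador's implementation: it assumes an earlier authentication step marks verified developer requests with a hypothetical `x-developer` header, uses nginx-style smooth weighted round robin for the stable/canary split, and the backend names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str
    weight: int
    current: int = 0  # running score used by smooth weighted round robin


class SmoothWeightedRoundRobin:
    """Nginx-style smooth weighted round robin over a fixed set of backends."""

    def __init__(self, backends):
        self.backends = backends
        self.total = sum(b.weight for b in backends)

    def pick(self):
        # Every backend earns its weight; the highest score wins and pays back the total.
        for b in self.backends:
            b.current += b.weight
        best = max(self.backends, key=lambda backend: backend.current)
        best.current -= self.total
        return best.name


def route(headers, balancer):
    """Choose a backend for one request (hypothetical policy, not Envoy's code)."""
    # Assumption: an upstream auth step sets this header only for verified developers.
    if headers.get("x-developer") == "true":
        return "hello-1.3-dev"
    # Everyone else is split between stable and canary by weight.
    return balancer.pick()


if __name__ == "__main__":
    lb = SmoothWeightedRoundRobin(
        [Backend("hello-1.1-stable", 90), Backend("hello-1.2-canary", 10)]
    )
    picks = [route({}, lb) for _ in range(100)]
    print(picks.count("hello-1.1-stable"), picks.count("hello-1.2-canary"))  # 90 10
```

With weights 90 and 10, every 100 non-developer requests are split exactly 90/10, and the smooth variant spreads the canary picks out instead of sending them in one burst.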

Deploying a canary

Ambassador is configured using Kubernetes annotations. We've already seen this dynamic configuration at work when we deployed a service that registered its own URL with Ambassador. Now we'll use the same workflow to configure Ambassador to route different percentages of traffic to two versions of the service.
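A weighted split like this comes down to two Ambassador Mappings that share a URL prefix: in Ambassador, `weight` is the percentage of traffic for that prefix sent to a Mapping, and an unweighted Mapping on the same prefix receives the rest. As a rough sketch, assuming the legacy `getambassador.io/config` annotation format with `apiVersion: ambassador/v0` (newer releases typically use Mapping custom resources instead), the hypothetical Python helper below renders the two Mapping documents. The helper, the Mapping names, and the `/hello/` prefix are illustrative, not the exact configuration `forge` generates for this example.

```python
from typing import Optional


def mapping_annotation(name, service, prefix, weight: Optional[float] = None):
    """Render a legacy Ambassador Mapping for the getambassador.io/config annotation.

    Hypothetical helper: field names follow the ambassador/v0 Mapping format.
    """
    lines = [
        "---",
        "apiVersion: ambassador/v0",
        "kind: Mapping",
        f"name: {name}",
        f"prefix: {prefix}",
        f"service: {service}",
    ]
    # Only the canary carries a weight; an unweighted Mapping on the same
    # prefix receives whatever traffic is left over.
    if weight is not None:
        lines.append(f"weight: {weight}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    stable = mapping_annotation("hello-world-stable", "hello-world-stable", "/hello/")
    canary = mapping_annotation("hello-world-canary", "hello-world-canary", "/hello/", 10.0)
    for annotation in (stable, canary):
        print(annotation)
```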

1. We'll start by deploying the same example service as before. This deploys a `hello-world-stable` service:

```shell
git clone https://github.com/datawire/hello-world-python
cd hello-world-python
forge deploy
```

2. Now, let's make a change to `app.py`:

```python
def root():
    # Changed greeting so canary responses are easy to tell apart from stable ones
    return "Hello World Canary! (up %s)\n" % elapsed()
```

3. We're going to deploy this change as a canary. We do this by deploying with the `canary` profile from `service.yaml`, which sets `weight: 10.0` so that roughly 10% of requests go to the canary:

```shell
forge --profile canary deploy
```

Testing the canary

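1. You'll see a new service, `hello-world-canary`, in the output of `kubectl get services`. To send it traffic, look up Ambassador's external IP address and set `AMBASSADOR_URL` to the `EXTERNAL-IP` value (here, 35.190.189.139):

```shell
kubectl get services ambassador
```

```
NAME         CLUSTER-IP      EXTERNAL-IP      PORT(S)        AGE
ambassador   10.11.250.208   35.190.189.139   80:31622/TCP   4d
```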

2. To see the results of this canary, we need to simulate some real traffic. We'll run a loop that issues a stream of GET requests to the service and watch how Ambassador routes between the `stable` and `canary` versions. With a weight of 10.0, about one response in ten should come from the canary:

```shell
while true; do curl http://$AMBASSADOR_URL/hello/; done
```

Rollback

Sometimes your canary isn't working, and you want to roll back so that all your production traffic goes to the stable version. Rolling back is as simple as deleting the canary deployment.

1. Let's delete the canary deployment and service.

```shell
kubectl delete svc,deploy hello-world-canary
```

2. If we rerun our loop, we'll see that all traffic now goes to the `stable` version:

```shell
while true; do curl http://$AMBASSADOR_URL/hello/; done
```

But how do you know when to roll back? That topic, monitoring, will be covered in another article in the Guide.
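Whether you are watching the canary or confirming a rollback, counting responses is more reliable than scanning `curl` output. The Python sketch below is a hypothetical helper, not part of the hello-world-python repository: it sends a fixed number of requests to `http://$AMBASSADOR_URL/hello/` and counts any body starting with `Hello World Canary!` (the canary's message from `app.py`) as a canary hit, treating everything else as stable. With the canary at weight 10.0 you would expect roughly 10% canary responses; after the rollback, none.

```python
import os
import urllib.request
from collections import Counter


def classify(body: str) -> str:
    # The canary's root() returns "Hello World Canary! ..."; anything else counts as stable.
    return "canary" if body.startswith("Hello World Canary!") else "stable"


def tally(bodies) -> Counter:
    """Count canary vs. stable responses in an iterable of response bodies."""
    return Counter(classify(b) for b in bodies)


def sample(url: str, n: int = 200) -> Counter:
    """Send n sequential GET requests to url and tally the responses."""
    bodies = []
    for _ in range(n):
        with urllib.request.urlopen(url, timeout=5) as resp:
            bodies.append(resp.read().decode("utf-8"))
    return tally(bodies)


if __name__ == "__main__":
    host = os.environ.get("AMBASSADOR_URL")
    if host:
        counts = sample(f"http://{host}/hello/")
        total = sum(counts.values())
        share = 100 * counts["canary"] / total
        print(f"canary: {counts['canary']}/{total} ({share:.1f}%)")
    else:
        print("Set AMBASSADOR_URL to Ambassador's external IP first.")
```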